Artificial intelligence (AI) and machine learning technologies have struggled to gain footholds in niches outside the world of cloud computing, but Nvidia is aiming to take them to the next level and rapidly change the face of business over the next few years. A new trend of AI-based enterprise applications is on the rise, creating a new market that extends from the cloud to the edge. Today, AI inference is used in search engines, by streaming video services such as Netflix, and by AI assistants such as Alexa. Nvidia has announced both mainstream servers designed to enable AI computing in the data center and edge servers designed to enable AI at the edge, all powered by Nvidia GPUs and software.

In this interview during Computex 2019, Paresh Kharya, Nvidia's director of Product Marketing, talks about Nvidia solutions in the cloud and new developments at the edge. He is working with customers and partners to deliver end-to-end solutions powered by Nvidia AI and deep learning technologies. Meanwhile, Nvidia, Amazon and Microsoft will team up to integrate the technology into their software stacks for developers looking to take advantage of accelerated machine learning both in the cloud and at the edge.

Nvidia T4 GPU-enabled mainstream servers address scaling problems

The new servers are equipped with Nvidia's T4 GPUs, which are based on the company's Turing architecture. Combined with Nvidia's CUDA-X AI libraries, this hardware enables businesses and enterprises to handle AI-based tasks, machine learning, data analytics, and virtual desktops more efficiently. Designed for the data center, the Tesla T4 GPUs draw only 70 watts of power during operation. The new servers were initially offered by several big server brands, and more than 30 server OEMs/ODMs are now building such systems for the market.

The Nvidia T4 is a new product based on the latest Turing architecture, delivering increased efficiency on any mainstream enterprise server. However, it is not a replacement for larger, more powerful GPUs such as the Nvidia V100, which are designed for specialized scale-up servers for AI training and HPC. Instead, it offers good performance while consuming very little power and carrying a lower price tag. This approach will help speed the deployment of popular AI-enabled applications, such as speech AI-based customer support, cyber security, smart retail and smart manufacturing, using previously trained machine learning models.

Nvidia EGX platform to bring low-latency AI to the edge

Meanwhile, with billions of IoT sensors being used by enterprises and generating huge volumes of data, there is strong demand to process that data for business insights. Kharya noted that, to be most useful for decision making, the data needs to be processed with AI in real time. Edge computing allows data produced by sensor devices to be processed close to where it is created instead of being sent over long routes to the cloud. It complements the cloud and lets customers run AI computing for automation even when the network connection is weak or lost. Nvidia announced a scalable platform named EGX that allows enterprises to easily deploy systems that meet their AI computing needs on premises at the edge. Kharya highlighted that edge servers such as those in the EGX platform will be distributed throughout the world to process data from these sensors in real time.
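As a rough illustration of how edge processing can keep working when the link to the cloud is weak or lost, the following minimal Python sketch runs inference locally and buffers results until a connection is available. It is not Nvidia's implementation: the `EdgeNode` class, the `infer` and `upload` callables, and the `max_buffered` limit are all hypothetical names chosen for this example.

```python
from collections import deque
from typing import Any, Callable, Deque


class EdgeNode:
    """Hypothetical edge node: decides locally, syncs to the cloud when it can."""

    def __init__(self, infer: Callable[[Any], Any],
                 upload: Callable[[Any], None],
                 max_buffered: int = 1000) -> None:
        self.infer = infer      # local model call (assumed to run on the edge device)
        self.upload = upload    # cloud upload call (assumed, may be unavailable)
        # Bounded buffer: the oldest results are dropped if the outage lasts too long.
        self.pending: Deque[Any] = deque(maxlen=max_buffered)

    def handle(self, reading: Any, online: bool) -> Any:
        """Process one sensor reading; the result is available without the cloud."""
        result = self.infer(reading)
        self.pending.append(result)
        if online:
            self.flush()
        return result

    def flush(self) -> int:
        """Send every buffered result to the cloud and return how many were sent."""
        sent = 0
        while self.pending:
            self.upload(self.pending.popleft())
            sent += 1
        return sent
```

In this sketch the local decision is returned immediately from `handle`, so automation does not wait on the network; the cloud only receives results once `online` is true.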

The EGX range starts with Nvidia Jetson Nano-based micro servers, which consume only a few watts and can provide one-half trillion operations per second (0.5 TOPS) of processing for tasks such as image recognition. Kharya showcased a system board that accommodates eight cameras for smart traffic management solutions. For more demanding workloads, EGX spans all the way to a full rack of Nvidia T4 servers, delivering more than 10,000 TOPS for real-time speech recognition and other real-time AI tasks.
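To put the two ends of that range in perspective, the short Python sketch below works through the arithmetic using only the figures quoted above. The helper names and the 4 TOPS example workload are assumptions made for illustration, and peak TOPS figures say nothing about sustained throughput on a real model.

```python
import math

# Figures quoted in the article.
NANO_TOPS = 0.5       # Jetson Nano-based micro server
RACK_TOPS = 10_000    # full rack of T4 servers (quoted as "more than 10,000")


def scale_factor(low_tops: float, high_tops: float) -> float:
    """How many times more peak throughput the high end offers."""
    return high_tops / low_tops


def nodes_needed(target_tops: float, per_node_tops: float) -> int:
    """Smallest whole number of nodes whose combined peak meets the target."""
    return math.ceil(target_tops / per_node_tops)


if __name__ == "__main__":
    print(scale_factor(NANO_TOPS, RACK_TOPS))   # rack vs. a single micro server
    print(nodes_needed(4.0, NANO_TOPS))         # hypothetical 4 TOPS workload
```

On these numbers, a full T4 rack offers at least 20,000 times the peak throughput of a single Nano-based micro server.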

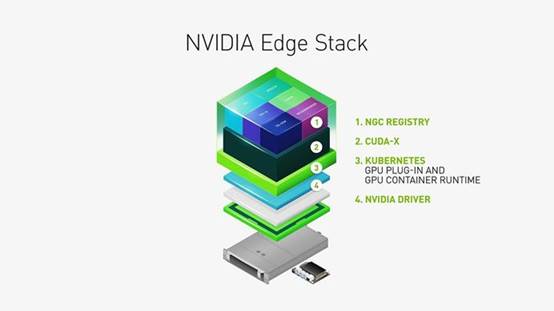

To simplify the management of AI applications on distributed servers with enterprise-grade software, Nvidia released the Edge Stack software suite. Nvidia Edge Stack is optimized software that provides container-based application management and includes Nvidia drivers, a CUDA Kubernetes plugin, a CUDA container runtime, CUDA-X libraries, and containerized AI frameworks and applications, including TensorRT, TensorRT Inference Server and DeepStream. Nvidia has also partnered with Red Hat to integrate and optimize Edge Stack with OpenShift.

The NGC-Ready validation program

Featuring Nvidia T4 or V100 GPUs and fine-tuned to run Nvidia CUDA-X AI acceleration libraries, these servers create a new class of enterprise servers for AI and data analytics with greater utility and versatility. To expand the range of Nvidia GPU-powered systems on which users can deploy GPU-accelerated software with confidence, Nvidia set up the NGC-Ready server validation program, under which AI and HPC software is tested as part of the validation process. Nvidia NGC provides a range of GPU-accelerated software, pre-trained AI models, and model training for data analytics, machine learning, deep learning and high-performance computing, all accelerated by CUDA-X AI.

The NGC-Ready program features a select set of systems powered by Nvidia GPUs with Tensor Cores that are ideal for a wide range of AI workloads. The same approach will apply to edge computing solutions: Nvidia Edge Stack is optimized for certified servers and can be downloaded from the Nvidia NGC registry. Businesses interested in deploying applications from the edge to the cloud will be able to connect to the AWS Greengrass or Microsoft Azure IoT cloud platforms, allowing for easy scalability depending on the required tasks.

Nvidia EGX edge stack

Nvidia EGX computing platform from Nano to T4

DIGITIMES' editorial team was not involved in the creation or production of this content.