The emergence of ChatGPT in late 2022 and early 2023 has sparked a new wave of AI trends. Amid this wave, the semiconductor sector has received some of the most attention. This is not only because AI can bring massive business opportunities, but also because the entire semiconductor industry, especially chip design, can achieve further breakthroughs with the assistance of AI.

Aart de Geus, chairman and CEO of Electronic Design Automation (EDA) tools manufacturer Synopsys, believes that AI is indeed an important trend that can change the entire semiconductor industry, and even the world. However, before this vision can be realized, many challenges beyond the technology itself must still be overcome, including talent, energy, and geopolitics.

The following is a summary of Aart de Geus's interview with DIGITIMES:

Q: What changes will the AI trend bring to EDA tools?

A: About 12 years ago, many people were asking what the next growth direction for the semiconductor industry would be. At the time, I predicted it would be "Smart Everything." My conclusion was that if Smart Everything happened, it would have a very big impact on the semiconductor industry. This is because making everything smart would require massive amounts of computation, which in turn would generate enormous demand for chips. Of course, no one could predict how big the scale would eventually grow.

It's worth noting that even back then, machine learning (ML) was already emerging as a direction for technology development. Long before the current rise of generative AI, Synopsys was already investing in ML and upgrading its development tools. Because ML was gaining momentum at the time, we invested considerable manpower in related technologies.

Fast forward to about five years ago, when Synopsys started to invest further in AI and automated design. By early 2021, we had our first proof point: a customer used our AI tools to tape out a major circuit and successfully bring it to production.

Within Synopsys' AI tools, there is a key feature called DSO (Design Space Optimization). Chips designed with DSO achieved better performance, lower power consumption, and in some cases, smaller size. Most importantly, it could massively reduce product development time, completing the design flow in about a third of the time (one month instead of three).

By early 2023, Synopsys' AI tools had been used in over 100 tape-outs of automated designs. By the middle of the year, that figure had grown to 230, a staggering rate of growth.

All in all, Synopsys adopted AI technology well before the current generative AI boom. As a result, it already has a certain advantage in this technology.

The strength of the generative AI we see now is that it can take enormous amounts of different information and create interesting and valuable content. However, on many occasions, it may also produce completely erroneous content. In the field of EDA tools, we have no room for error. If a single transistor doesn't work, nothing works.

This is not to say I am negative about generative AI. I do think what we see here is a fantastic application, and we are already adopting some generative AI technology inside Synopsys. However, its use in EDA tools is still limited.

Q: It appears that you believe the next generation of EDA tools will develop toward the "automation of automation" direction. Will this direction solve the semiconductor talent shortage issue within 5-10 years?

A: Exactly 37 years ago, Synopsys invented the so-called synthesizer. When we first brought it to users, about 2% did say that it was "taking their jobs away," but 98% welcomed the convenience the new tool brought, as they could become more efficient and do so much more.

Now, 35 or so years later, we are in exactly the same situation. As automated tools become more powerful, what human intelligence needs to ask is, "What more can I do with these new tools?" As we reduce the amount of drudgery, how can we be more creative in designing new architectures and systems?

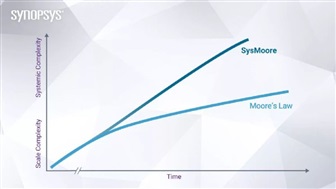

A few years ago, I mentioned that we're moving from the era of Moore's Law and entering what I like to call the "SysMoore" law. SysMoore is taking the long-time notion of Moore's Law and combining it with the systematic complexity of new innovative technology. The previous Moore's law emphasizes exponential growth in scale. The SysMoore law emphasizes systemic complex growth.

Photo: The previous Moore's law emphasizes exponential growth in scale. The SysMoore law emphasizes systemic complex growth. Credit: Synopsys

For example, as cars become electrified and smarter, the functions and structures of their systems are becoming more and more complex. One chip no longer solves all the problems. Solving them requires knowledge from many different engineering and professional disciplines, which has driven up the demand for semiconductor engineers, hence the talent shortage.

AI can certainly unlock more human potential, allowing us to think about what more can be done. Most people who come across a useful tool are pretty happy, because their job has just become easier. They wouldn't say things like, "This tool took my job, I have nothing to do now."

Now, chip design tools are different from regular tools. You need a good education and solid knowledge to use them. Therefore, the amount of resources invested in education will affect the size of the talent pool. At the same time, the economic return, i.e., how much these jobs pay, will also have an effect.

In the '90s, some countries drifted away from engineering. In that era, everybody wanted to be either a finance person or a lawyer. Today, engineering has at least returned to society's mainstream, and we are definitely seeing more interest from universities. However, no matter what field you're in, the core ability required is to handle complex tasks and figure out how to solve problems.

When I talk to engineering graduates, I always tell them that while you can be a deep engineer in your own field of expertise, knowing the adjacent fields will be very valuable. Take cars, for example. Hardware and software are now equally important. Besides chips, engineers also need to understand thermal behavior, mechanics, and even reliability. These are all different domains. A broader perspective is something everyone needs.

Q: Driven by AI, the Application Specific Integrated Circuit (ASIC) has become a very popular option in recent years. What's your view on this new ASIC trend? Will more and more companies adopt this model?

A: Whether for ASICs or standard products, I believe the visible trend is that we're going to see more and more cross-industry collaboration, with companies bringing different skills together to build a good solution. This is exactly what's happening in autonomous driving development right now.

The same development will occur in the AI sector too. We can already see some companies that specialize in AI algorithms working together with chip design houses to develop faster and better-performing chips. They're also working with cloud service providers to scale up computing capacity and availability. This is also why I believe that in the AI sector, understanding system complexity is as important as expertise in each individual technology.

Another point I'd like to add is that the trend Moore's Law was predicated on, which is making things smaller, making more of them, and doing it cheaper, is very much still evolving. However, because this trend has slowed down, attention has gradually shifted to making different chiplets in the same package work together more efficiently.

To achieve this, signal transmission between chiplets needs to be fast enough. This may require even more complex manufacturing technology, which greatly increases design complexity. While some companies can handle all the functions in an IC themselves, other products combine technology from multiple design companies, which requires tight teamwork.

This goes back to the importance of system complexity I mentioned earlier. As systems become more complex, companies will need a whole different level of collaboration and cooperation. The success of a product no longer depends on one individual company. Hardware and software are also becoming much more interwoven. As a result, cross-sector interaction and collaboration will increase.

Q: In future AI business opportunities, what key traits do semiconductor corporations need to have to achieve success?

A: That's a very complex question, but it basically comes down to two key factors. The first is to be good at what you do. To do so, you cannot ignore the development trends in the tech industry. No matter what you do, you need to keep up with the constant changes in the market.

Past experience has taught us that one characteristic of the high-tech sector is that one or two key changes determine what happens in the next generation. People have asked me why Synopsys has been successful for so many years, and one of the reasons is that we never fell behind the semiconductor industry as it grew exponentially. We're always at the cutting edge of technology.

The second factor, which I think has become more important than the first, is that you need to be really good for your partners. In other words, don't think of yourself as just a supplier or a client, and don't interact with your partners only from a cost-effectiveness perspective. You need to think about what you can do to help your partners create greater differentiation and advantages.

There's nothing wrong with reducing costs, but increasing differentiation is even better. This is also where AI technology can come in. It is not only very good at reducing costs, but it can also create greater differentiation and unique advantages.