In 2012, Google set off a big bang in the artificial intelligence (AI) community and brought deep learning into the public eye when it announced that Andrew Ng and the Google Brain project had taught an army of servers to recognize cats simply by showing them hours and hours of YouTube videos. This was a milestone for the AI industry: Deep Neural Networks (DNNs) were used for machine learning, an approach that has since revolutionized artificial intelligence and the analysis of big data and dramatically influenced how challenges in applications such as voice recognition and translation, medical analysis and self-driving automobiles are being approached. It has also introduced a new computing model to the market, one that quickly placed NVIDIA front and center in the deep learning community and that is now starting to deliver what NVIDIA CEO Jen-Hsun Huang characterizes as superhuman results for the company.

In its most recent quarterly results, NVIDIA announced record datacenter revenues of US$143 million, up 63% year on year (compared with 9% growth YoY for Intel) and up 47% sequentially, reflecting the enormous growth in deep learning.

Hyperscale companies have been the fastest adopters since NVIDIA first became involved in deep learning three years ago. NVIDIA GPUs today accelerate every major deep learning framework in the world. NVIDIA powers IBM Watson and Facebook's Big Sur server for AI, and the company's GPUs are in AI platforms at hyperscale giants such as Microsoft, Amazon, Alibaba and Baidu for both training and real-time inference. Twitter has recently said it uses NVIDIA GPUs to help users discover the right content among the millions of images and videos shared every day.

And now, with the launch of the NVIDIA DGX-1, the world's first purpose-built system for deep learning, based on NVIDIA's ambitious new Pascal GPU architecture, the company expects increased deep learning adoption within academia and the hyperscale landscape, as well as growing deployment among large enterprises.

Neural networks

When Ng approached machine learning with the Google Brain project, he saw that traditional coding, i.e. writing if-then statements to classify every feature of every object in every image, would be too labor intensive for Google's massive video, image, and voice datasets. Instead, Ng used an AI algorithm called a Deep Neural Network (DNN), which works by training the computer program with data, without having to write traditional computer code.

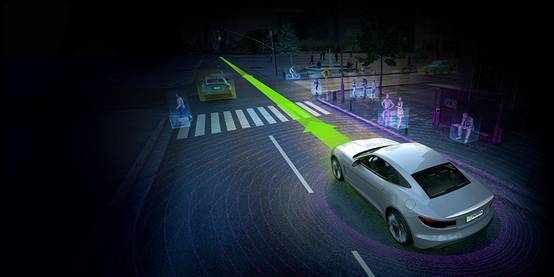

For neural networks, rather than having to write code to identify what a self-driving car is seeing on the road, or what an x-ray image shows, researchers implement a framework and then feed it a lot of untagged data - in this case, 10 million YouTube video frames. The network transforms this input data by calculating a weighted sum over the inputs and then applying a non-linear function to that sum to calculate an intermediate state. The combination of these steps is called a neuron layer, and through repetition of these steps, the artificial neural network learns multiple layers of non-linear features, which it then combines in a final layer to create a prediction.
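The layer computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the Google Brain implementation: the function names, the `tanh` non-linearity, the bias terms and the hand-picked weights are all assumptions made for the example, and only the forward pass (producing a prediction) is shown, not the training that would adjust the weights.

```python
import math

def layer(inputs, weights, biases, activation=math.tanh):
    """One neuron layer: a weighted sum over the inputs, then a non-linear function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum over the inputs (the bias term is an assumption of this sketch).
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # The non-linear function turns the sum into the intermediate state.
        outputs.append(activation(weighted_sum))
    return outputs

def forward(inputs, layers):
    """Repeat the layer step: each layer's output becomes the next layer's input."""
    state = inputs
    for weights, biases in layers:
        state = layer(state, weights, biases)
    return state

# Hypothetical values chosen by hand; a real network would learn its weights from data.
pixels = [0.5, -1.0, 0.25]
network = [
    ([[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]], [0.0, 0.1]),  # hidden layer: 3 inputs -> 2 neurons
    ([[1.0, -1.0]], [0.0]),                              # final layer: 2 inputs -> 1 prediction
]
prediction = forward(pixels, network)
```

Here `prediction` is a one-element list holding the output of the final layer. Training would consist of repeatedly nudging the numbers in `network` so that this output moves toward the desired answer.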

However, one main issue with neural networks is that they are computationally greedy, and can thus be quite expensive if they involve too many neurons. Up to that point, most had been designed with 1-10 million connections. Ng wanted to use over one billion connections. But while he rethought the software side of the equation by using a DNN to make it more efficient, Ng stuck with a traditional approach on the hardware side and simply leveraged 2,000 Google servers (16,000 cores, drawing 600,000 watts) from the company's datacenter to create a distributed computing infrastructure for training the neural network, at a cost of millions of dollars.

It did not take long for researchers to realize that since neural networks are at their core based on matrix math (with non-linear functions applied to the results) and floating-point arithmetic, they were inherently well suited to being processed by GPUs, which can have up to 3,500 parallel CUDA cores. Even so, researchers were amazed at just how well suited GPUs were. A follow-up experiment was performed using a combination of GPUs and off-the-shelf hardware, and the results of Google's neural network training experiment were achieved with just three commodity servers using 12 GPUs.
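To see why this kind of math maps well onto thousands of parallel cores, consider the weighted sums of one layer written as a matrix multiplication. The sketch below is plain Python with hypothetical, hand-picked numbers; it runs sequentially on a CPU and only illustrates the structure of the work, not how a GPU library would implement it.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    # Every output cell is its own dot product and depends on no other cell,
    # so in principle each one could be computed by a separate core.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]

# Hypothetical batch: 3 input examples with 2 features each.
batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
# Hypothetical weights: 2 features feeding 2 neurons.
weights = [[0.5, -1.0], [0.25, 2.0]]

weighted_sums = matmul(batch, weights)
independent_cells = len(weighted_sums) * len(weighted_sums[0])
```

In this toy case there are only six independent cells. With millions of images and networks with up to a billion connections, the number of independent floating-point operations becomes large enough to keep thousands of cores busy at once.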

This was a key turning point for deep learning, setting off dramatic growth in the market and, perhaps more importantly, democratizing deep learning. There are only a handful of companies in the world that can dedicate 2,000 servers and 600,000 watts of power to a project, but three servers at a cost of around US$50,000 are affordable enough for any research center at any division of a large company. Customers quickly started housing GPU-based solutions at data centers or plugging makeshift solutions into wall sockets at their offices. Even at the most basic level, a single user can buy a GeForce GPU to get going on deep learning, or simply start accessing GPUs in the cloud.

GPUs are not only cost efficient but they also allow researchers to be more productive. As previously mentioned, deep neural networks need to be trained by inputting hundreds of thousands, if not millions, of images. This training takes time. For one image-recognition neural network called AlexNet, it took an NVIDIA TITAN X (which sells for less than US$1,000) less than three days to train the model using the 1.2 million image ImageNet dataset, compared with over 40 days for a 16-core CPU.

That was in 2015, and the results did not satisfy Jen-Hsun Huang, who challenged the company's engineering team to come up with a solution that could train AlexNet an order of magnitude faster. And while we all know that Moore's law doesn't improve performance 10x in one year, at this year's NVIDIA GTC (GPU Technology Conference) the DGX-1 was able to train AlexNet in only two hours. This also highlights Huang's commitment to achieving a post-Moore's Law era of computing acceleration, whereby new models are implemented in order to create productivity and efficiency gains. GPU computing is not only a major new computing model but one that is now going mainstream.
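The AlexNet training times quoted above can be turned into rough speedup factors. The sketch below treats the quoted bounds ("over 40 days", "less than three days", "only two hours") as if they were exact values, which is an assumption; the resulting ratios are therefore approximate.

```python
# Training times for AlexNet as quoted in the text, converted to hours.
# Assumption: the bounds are treated as exact for the sake of the arithmetic.
CPU_HOURS = 40 * 24      # "over 40 days" on a 16-core CPU
TITAN_X_HOURS = 3 * 24   # "less than three days" on a TITAN X
DGX1_HOURS = 2           # "only two hours" on the DGX-1

def speedup(slower_hours, faster_hours):
    """How many times faster the second system finished than the first."""
    return slower_hours / faster_hours

gpu_vs_cpu = speedup(CPU_HOURS, TITAN_X_HOURS)   # about 13.3
dgx_vs_gpu = speedup(TITAN_X_HOURS, DGX1_HOURS)  # 36.0
dgx_vs_cpu = speedup(CPU_HOURS, DGX1_HOURS)      # 480.0

print(f"TITAN X vs CPU:   {gpu_vs_cpu:.1f}x")
print(f"DGX-1 vs TITAN X: {dgx_vs_gpu:.1f}x")
print(f"DGX-1 vs CPU:     {dgx_vs_cpu:.1f}x")
```

Under these assumptions the DGX-1 result is well past the order-of-magnitude (10x) target relative to the single TITAN X, though the exact factor depends on how far below three days the TITAN X run actually was.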

And it is not just hyperscale firms like Google and Baidu that are taking advantage of deep learning. For example, there are now 19 different automotive companies that have research labs in Silicon Valley. In the medical imaging industry, every big company has big data projects of one kind or another, and they are all seeking out NVIDIA. In 2015 alone, the company spoke with 3,500 customers about deep learning projects. Interest spans all industries.

The reason NVIDIA is being sought out is that its GPUs are really the ideal processor for the massively parallel problems tackled with deep learning, and the company has optimized its entire stack of platforms, from the architecture to the design, to the system, to the middleware, to the system software, all the way to the work that it does with developers all over the world, so that it can optimize the entire experience to deliver the best performance.

For example, the recently launched DGX-1 is the first system designed specifically for deep learning - it comes fully integrated with hardware, deep learning software and development tools for quick, easy deployment.

The turnkey system is built on eight NVIDIA Tesla P100 GPUs based on the Pascal GPU architecture. Pascal is really the first GPU platform that was designed from the ground up for applications such as deep learning that go well beyond computer graphics. Each Tesla P100 GPU is paired with 16GB of memory, and the system delivers up to 170 teraflops of half-precision (FP16) peak performance, providing the throughput of 250 CPU-based servers.
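A little arithmetic puts the system-level figures above in per-GPU terms. This is a back-of-the-envelope sketch: it assumes the 170-teraflop peak is split evenly across the eight GPUs, and it assumes the "250 CPU-based servers" comparison can be read as a simple ratio of peak throughput, which the text does not state.

```python
# Figures quoted in the text for the DGX-1.
NUM_GPUS = 8
MEMORY_PER_GPU_GB = 16
SYSTEM_PEAK_FP16_TFLOPS = 170
EQUIVALENT_CPU_SERVERS = 250

# Total GPU memory across the system.
total_gpu_memory_gb = NUM_GPUS * MEMORY_PER_GPU_GB  # 128 GB

# Assumption: the system peak is divided evenly among the GPUs.
peak_fp16_tflops_per_gpu = SYSTEM_PEAK_FP16_TFLOPS / NUM_GPUS  # 21.25

# Assumption: the server comparison is a plain throughput ratio.
implied_tflops_per_cpu_server = SYSTEM_PEAK_FP16_TFLOPS / EQUIVALENT_CPU_SERVERS

print(f"Total GPU memory:       {total_gpu_memory_gb} GB")
print(f"Peak FP16 per GPU:      {peak_fp16_tflops_per_gpu} teraflops")
print(f"Implied per CPU server: {implied_tflops_per_cpu_server:.2f} teraflops")
```

These are peak numbers, so sustained throughput on a real training job would be lower.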

The DGX-1 features other breakthrough technologies that maximize performance and ease of use, including the NVIDIA NVLink high-speed interconnect for maximum application scalability. Each GPU is manufactured using 16nm FinFET fabrication technology and is the largest, most powerful 16nm chip ever built, packing 15.3 billion transistors onto a 600mm² die for unprecedented energy efficiency.

Key research centers around the world will be receiving the first servers this month, with the customer list including a number of universities such as Stanford, Berkeley, NYU, the University of Toronto and Hong Kong University. Massachusetts General Hospital will also be among the first to get a DGX-1. The hospital launched an initiative that applies AI techniques to improving the detection, diagnosis, treatment, and management of diseases, drawing on its database of some 10 billion medical images. This is the kind of research that the DGX-1 was designed to support.

As a turnkey solution, the NVIDIA DGX-1 also features a comprehensive deep learning software suite, including the NVIDIA Deep Learning GPU Training System (DIGITS) and the newly released NVIDIA CUDA Deep Neural Network library (cuDNN) version 5, a GPU-accelerated library of primitives for designing DNNs. It also includes optimized versions of several widely used deep learning frameworks - Caffe, Theano and Torch.

Over the past three years, NVIDIA has seen how quickly the technology has changed in the deep learning community, including the software. Most of the software used is open source, and while hyperscale companies like Baidu and Google may be very comfortable working with open source software, the same cannot be said for more traditional companies like high-end automotive firms or hospitals. Therefore, NVIDIA will provide services for maintaining and patching the open source software for the DGX-1. A system box can be installed in a data center and connected to NVIDIA's cloud. There, customers can find their deep learning software and download the latest optimized versions, with NVIDIA patching any bugs that come up. And all the while, all of their data remains secure and never leaves their data center.

When it comes to deep learning, NVIDIA is seeing deployments not just at one or two customers but in basically every hyperscale datacenter in the world, in every country. Jen-Hsun Huang thinks this is a very, very big deal and that it is not just a short-term phenomenon. The amount of data that the world processes is just going to keep on growing.
